Hello and welcome! I am a second-year PhD student in Electrical and Computer Engineering at Purdue University, advised by Prof. Christopher G. Brinton. Before that, I obtained my master’s degree in Electrical and Computer Engineering at ShanghaiTech University under the supervision of Prof. Yong Zhou and Prof. Yuanming Shi. From Aug. 2022 to Feb. 2023, I was a research intern in the Optimization for Machine Learning lab at KAUST, led by Prof. Peter Richtárik.

Interests

- RL-based Post-training

- Efficient Fine-Tuning of LLMs

- Federated Learning

Education

PhD Electrical and Computer Engineering

Purdue University

MS Electrical and Computer Engineering

ShanghaiTech University

BS Electrical Engineering

Shanghai University

Selected Works

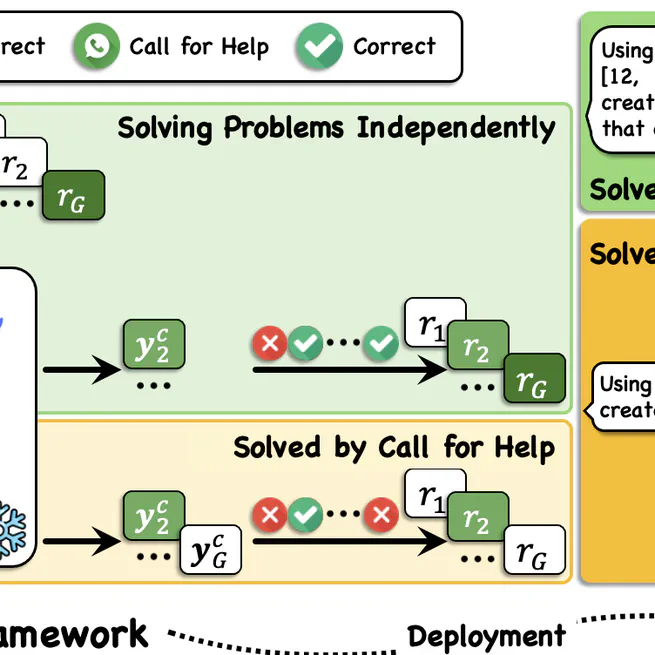

Collaborative Device-Cloud LLM Inference through Reinforcement Learning

Sep 28, 2025

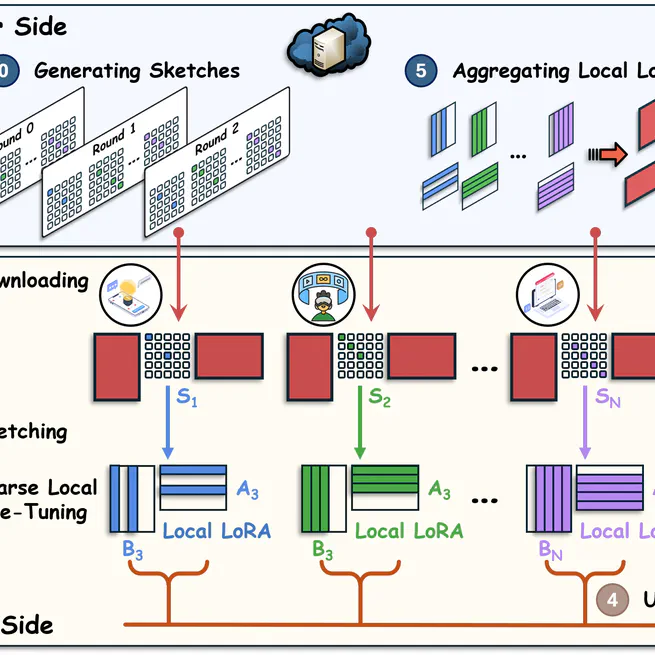

Federated Sketching LoRA: On-Device Collaborative Fine-Tuning of Large Language Models

Jan 31, 2025

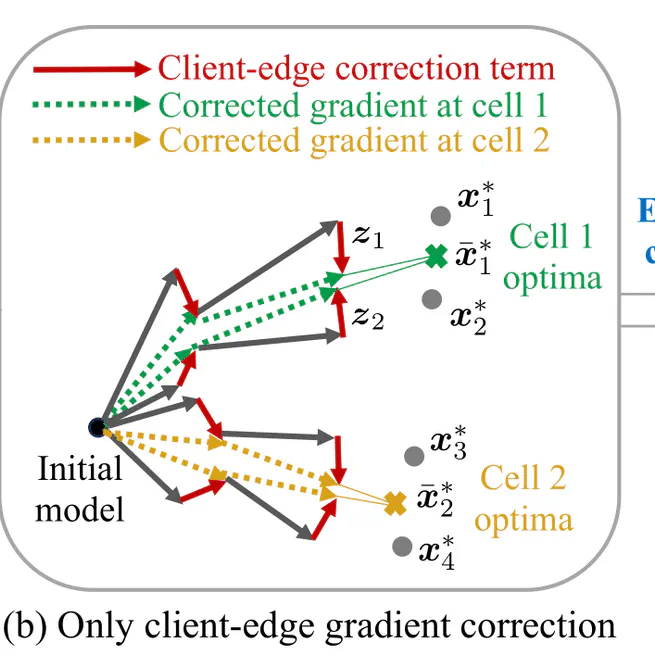

Hierarchical Federated Learning with Multi-Timescale Gradient Correction

Sep 1, 2024

Federated Learning over Hierarchical Wireless Networks: Training Latency Minimization via Submodel Partitioning

Feb 1, 2024

Communication-Efficient Stochastic Zeroth-Order Optimization for Federated Learning

Jan 1, 2022

News

I am looking for an internship for 2026.

Academic Services

Conference Reviewer

- Advances in Neural Information Processing Systems (NeurIPS)

- International Conference on Machine Learning (ICML)

- International Conference on Learning Representations (ICLR)

- International Conference on Artificial Intelligence and Statistics (AISTATS)

- IEEE International Conference on Computer Communications (INFOCOM)

Journal Reviewer

- Transactions on Machine Learning Research (TMLR)

- IEEE Transactions on Signal Processing (TSP)

- IEEE Transactions on Wireless Communications (TWC)

- IEEE Transactions on Communications (TCOM)

- IEEE Transactions on Mobile Computing (TMC)

- IEEE Internet of Things Journal (IoT-J)

- IEEE Transactions on Machine Learning in Communications and Networking (TMLCN)