- RL-based Post-training

- Efficient Fine-Tuning of LLMs

- Federated Learning

PhD in ECE Department

Purdue University

MS in ECE Department

ShanghaiTech

BS in EE Department

Shanghai University

My work focuses on LLM post-training and multi-agent LLM systems:

- RL-based post-training: developing reinforcement learning frameworks for adaptive control of, and collaboration among, multi-agent LLM systems.

- Efficient on-device LLM fine-tuning: enabling distributed on-device LLM fine-tuning under computation, communication, and memory constraints.

- Federated learning and optimization: designing efficient, convergent algorithms for distributed model training.

PhD, Purdue University — Electrical & Computer Engineering (Aug 2023 — Present)

Working on LLM post-training and reasoning, with an emphasis on RL-based alignment, agent collaboration, and communication-efficient fine-tuning for distributed on-device LLM systems.

Research Intern, Optimization for Machine Learning Lab, KAUST (Aug 2022 — Feb 2023)

Working on optimization theory and its applications to machine learning, especially primal-dual algorithms.

MS, ShanghaiTech University — Electrical & Computer Engineering (2020 — 2023)

Working on optimization theory and its applications to communication systems.

- Feb 2026: Our work on DA-GRPO, a budget-aware reinforcement learning framework that enables continual learning in device–cloud LLM systems, is now on arXiv.

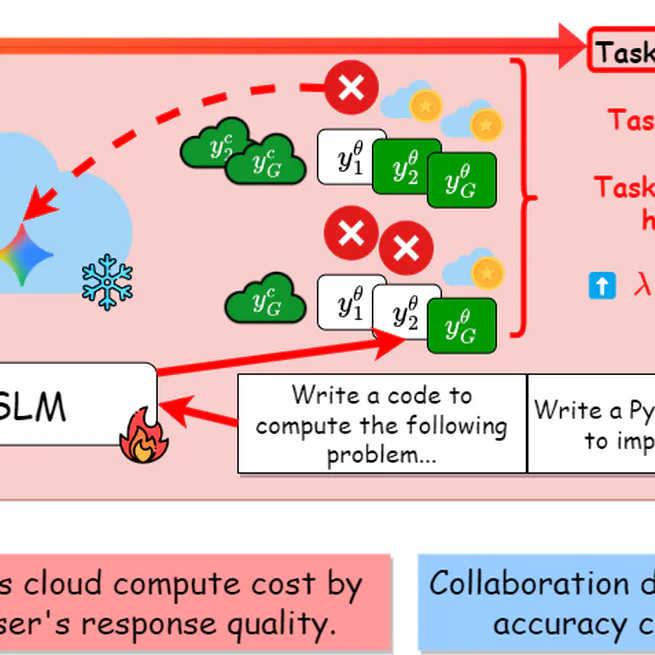

- Sept 2025: Our work on device–cloud collaborative LLM reasoning, which introduces a unified RL-based framework for adaptive routing and post-training, is now on arXiv.

- Jan 2025: Our work on Federated Sketching LoRA (FSLoRA), a communication-efficient framework for collaborative on-device LLM fine-tuning under heterogeneous resource constraints, is now on arXiv.

- Sept 2024: Our paper on hierarchical federated learning with multi-timescale gradient correction (MTGC) has been accepted to NeurIPS 2024.

- Aug 2023: Joined Purdue as a PhD student after completing my MS at ShanghaiTech.

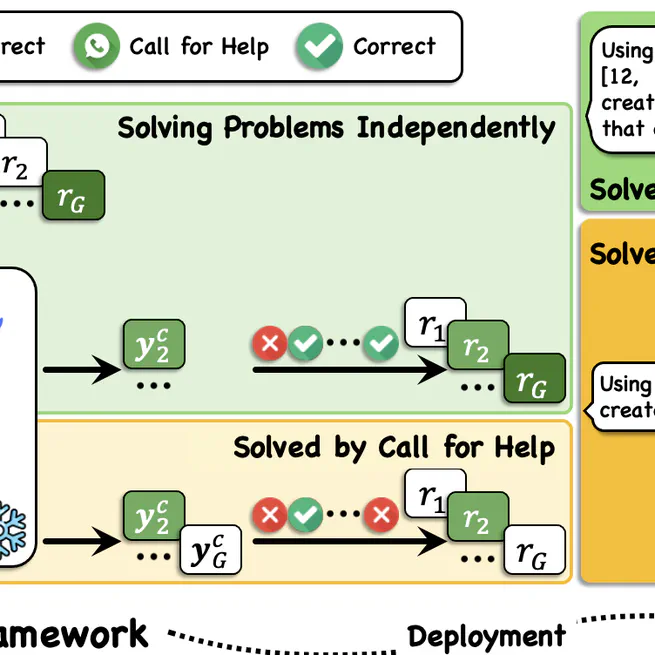

Joint Continual Learning of Local Language Models and Cloud Offloading Decisions with Budget Constraints

Continual post-training framework (DA-GRPO) that jointly learns on-device SLM policies and budget-aware cloud offloading decisions, reducing forgetting while respecting cloud-assistance budget constraints.

Bridging On-Device and Cloud LLMs for Collaborative Reasoning: A Unified Methodology for Local Routing and Post-Training

RL-based framework that enables on-device LLMs to decide when to invoke cloud models, jointly learning routing and post-training to balance accuracy and compute.

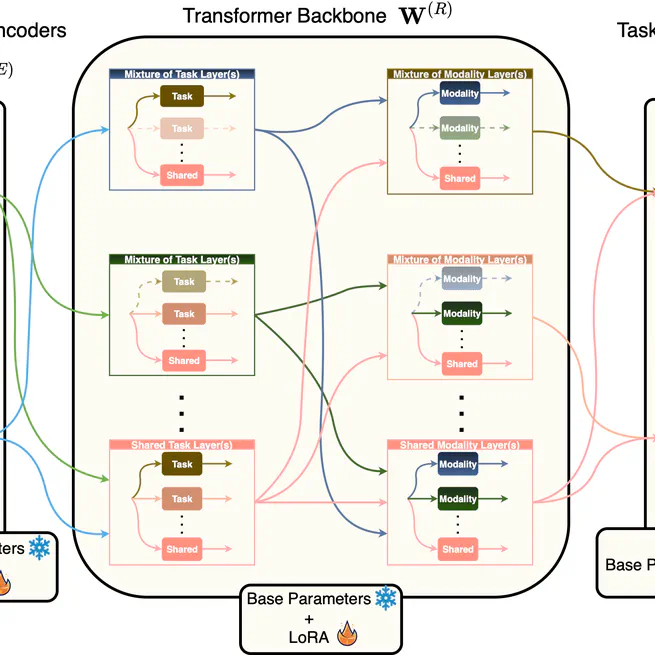

TAP: Two-Stage Adaptive Personalization of Multi-task and Multi-Modal Foundation Models in Federated Learning

Personalization algorithm for multi-task, multi-modal foundation models that combines architecture-aware replacement with post-FL knowledge distillation.

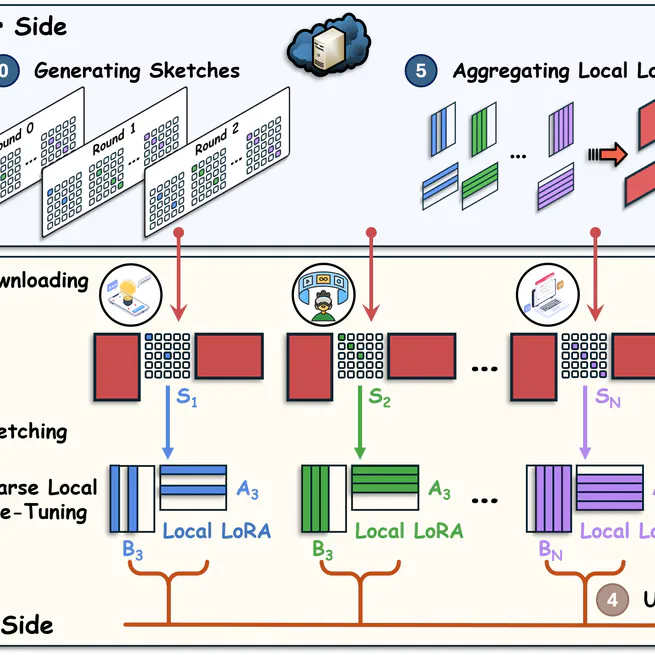

Federated Sketching LoRA: On-Device Collaborative Fine-Tuning of Large Language Models

Communication-efficient framework that uses sketching to adapt LoRA ranks per device, enabling collaborative on-device LLM fine-tuning under heterogeneous resources.

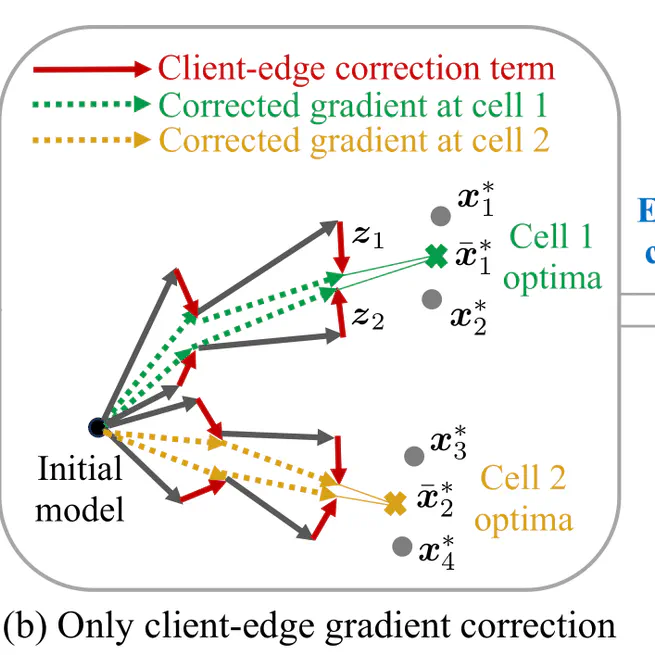

Hierarchical Federated Learning with Multi-Timescale Gradient Correction

Multi-timescale gradient correction method that stabilizes hierarchical FL across client and group levels, with convergence guarantees robust to data heterogeneity.

Federated Learning over Hierarchical Wireless Networks: Training Latency Minimization via Submodel Partitioning

HIST framework that partitions large models into submodels across cells to reduce computation, storage, and communication costs while preserving convergence guarantees.

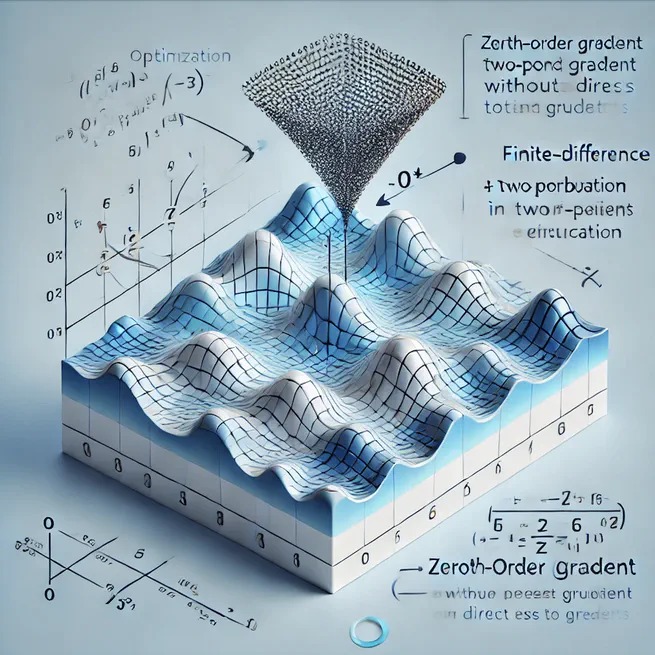

Communication-Efficient Stochastic Zeroth-Order Optimization for Federated Learning

FedZO algorithm for derivative-free federated optimization, with an AirComp-assisted variant that preserves convergence under noisy wireless aggregation.

Conference Reviewer

- Advances in Neural Information Processing Systems (NeurIPS)

- International Conference on Machine Learning (ICML)

- International Conference on Learning Representations (ICLR)

- International Conference on Artificial Intelligence and Statistics (AISTATS)

- IEEE International Conference on Computer Communications (INFOCOM)

Journal Reviewer

- Transactions on Machine Learning Research (TMLR)

- IEEE Transactions on Signal Processing (TSP)

- IEEE Transactions on Wireless Communications (TWC)

- IEEE Transactions on Communications (TCOM)

- IEEE Transactions on Mobile Computing (TMC)

- IEEE Internet of Things Journal (IoT-J)

- IEEE Transactions on Machine Learning in Communications and Networking (TMLCN)